Dear Silicon Valley: Stop saying stupid stuff

“Disruption” isn’t the same as “stupid,” but they sometimes sound similar. At least, they do when uttered by a certain strain of Silicon Valley entrepreneur.

This thought struck me while listening to a Valley exec at an enterprise software conference. He stumbled through PowerPoint (“How do you people use this app? I’m a Keynote guy”), agonized over how he could “possibly get used to Exchange after running his startup on Gmail” (his company had recently been acquired by a large software vendor), and generally made it clear that he had no idea how real companies work.

He lives in a bubble that has drones delivering tacos to those not already subsisting on Soylent. He wants to change enterprise computing, but he clearly has no appreciation for the challenges facing enterprises mired in decades of technical debt.

He is, in other words, either the worst or best person to change the world. (My vote: worst.)

Daydreaming the future

Are you still reading? Why aren’t you out building a bot? Or building apps for self-driving cars? Or doing Something That Matters™?

I suspect it’s because you have a job, one that pays you in real dollars rather than in venture money. Venture money can subsidize a dream long enough to turn it into reality, but it is just as likely to obscure the hard steps needed to get companies to pay for your product.

Those “real dollars,” as noted, require real customers paying real money. It’s not surprising, therefore, that people like Red Hat’s Gordon Haff grow frustrated with the Valley’s preoccupation with myths: “Will people just stop talking as if the fully autonomous vehicle thing is going to be here in a few years?”

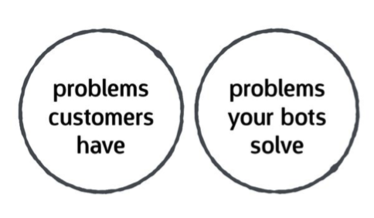

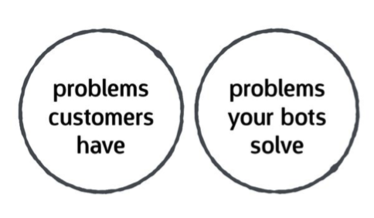

Or take David Bland’s Venn diagram to describe the current value of bots:

David J. Bland


This isn’t to suggest that autonomous cars, AI, and other Silicon Valley dreams aren’t important. They are. But they’re also not what real customers buy today.

Head in the clouds

What about topics that are closer to reality, like cloud computing? I’m an ardent advocate for public cloud providers like Amazon Web Services and Microsoft Azure, and I see a future where most workloads will move there. But let’s face facts: Most workloads still run in your enterprise’s musty data centers, and they will for some time. Public cloud services are booming, yet they’re still a tiny fraction of overall IT spending, according to IDC. That’s not going to change anytime soon.

Even where enterprises want to “do cloud,” they’ll get there through an inefficient foray into private cloud computing. Why? Because it’s much easier to change technology than culture. “Own your hardware” still permeates enterprise IT and will for years.

Any startup entrepreneur who comes in waving her arms, telling a CIO or a line-of-business exec to move everything to a cloud model (whether SaaS, PaaS, or IaaS) right now, should be laughed out of the building. It’s not that easy, partly because of simple cost calculations. As Forrester has found, the cost of a cloud service itself (10 percent of total cost) pales in comparison to the labor costs (50 percent) associated with migrating legacy apps to new-school platforms.

The cloud’s “get out of jail free” card, in other words, isn’t free, and pretending that migration is as easy as spinning up an instance on EC2 is about as credible as that conference speaker. Yes, there is a la-la land where everything runs in the cloud, but it’s way out on the horizon for most enterprises.

Getting real

But why stop with the cloud? Every big trend looms larger in the press than in the CIO’s office. Despite the furor over big data, for example, Hadoop adoption was still “fairly anemic” as recently as last year, according to Gartner.

In my current world of mobile, aspirations to transform business for mobile experiences are far grander than the budgets applied to make those dreams real. As I’ve written before, there’s a huge disconnect between enterprises that claim to have a mobile strategy and those with an actual mobile budget. It’s one thing to proclaim the mobile era, but it’s another to deliver mobile solutions the average enterprise will actually use.

The list goes on.

Yes, the comfortable world of desktops, data centers, and other enterprise relics is being disrupted. But it’s not happening as fast as we think, and the spoils will often go to the very dinosaurs that impede progress, because they are the vendors already set up in their customers’ accounting systems. It’s not a Silicon Valley fairy tale, but it’s probably closer to reality.

Source: InfoWorld Big Data